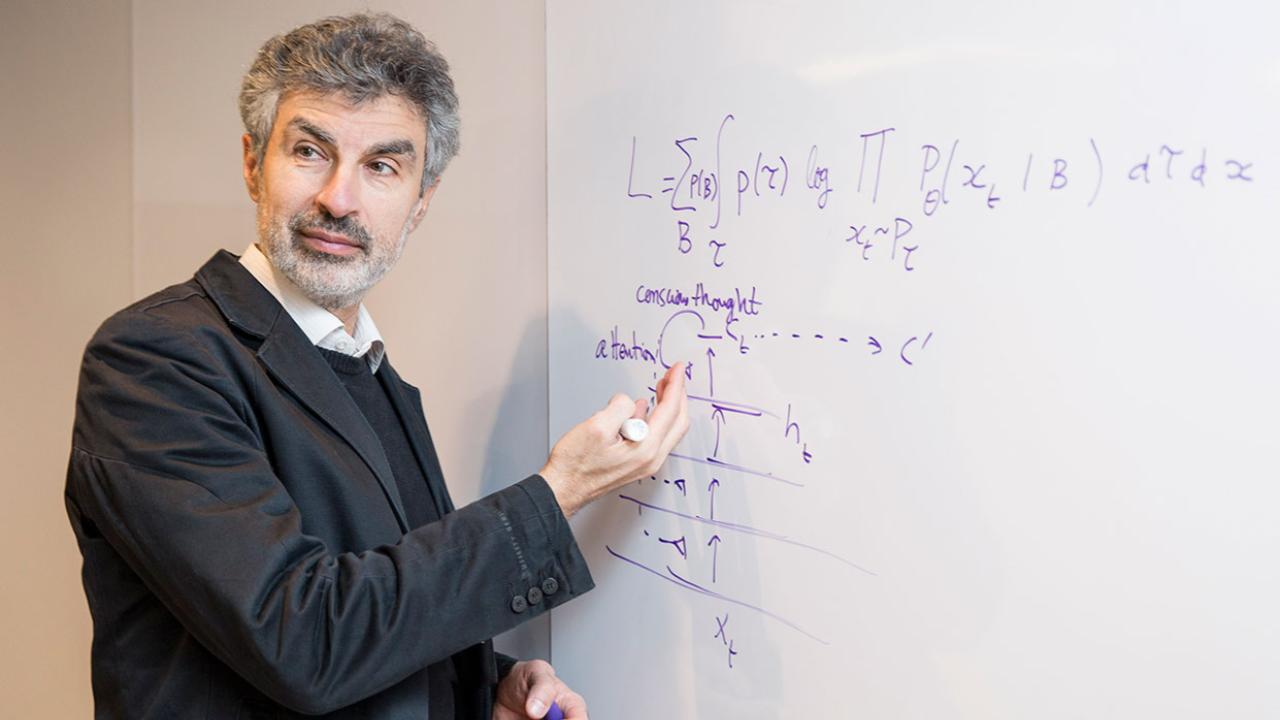

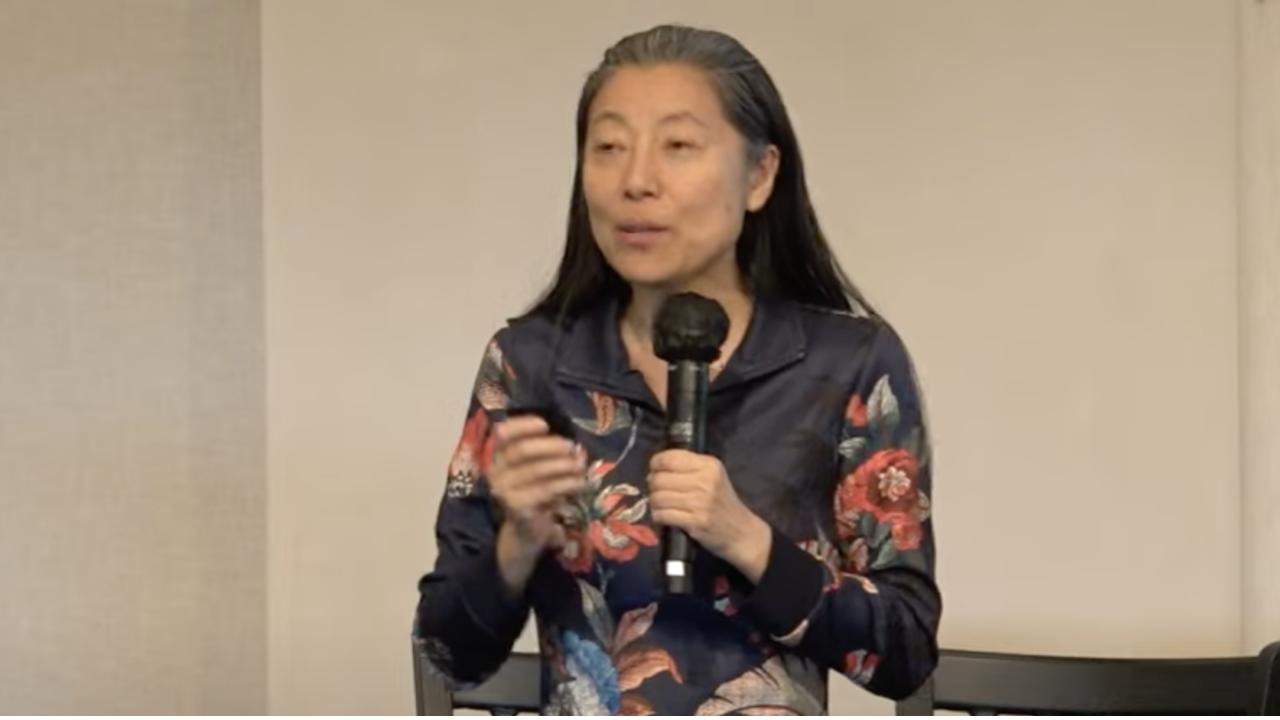

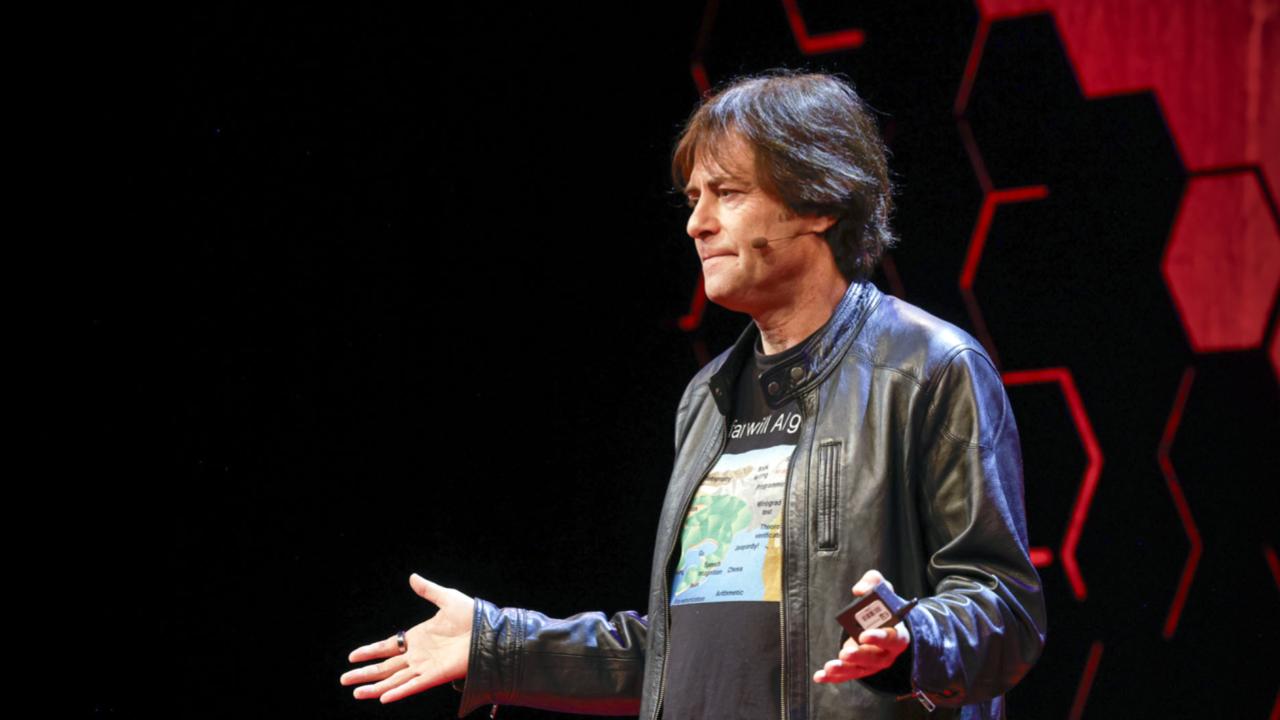

Join world-renowned AI pioneer Yoshua Bengio as he takes you on a deep dive into the fascinating world of machine learning and deep learning. In this insightful masterclass, Bengio explores the evolution of artificial intelligence, from its early days to the revolutionary breakthroughs in neural networks and deep learning.

Discover how machines learn from data, the distinction between supervised and unsupervised learning, and the crucial role of neuroscience-inspired algorithms in shaping the future of AI. Bengio also shares his personal journey—how he became immersed in AI research, the challenges he faced, and the exhilarating moments of discovery that drive scientific progress.

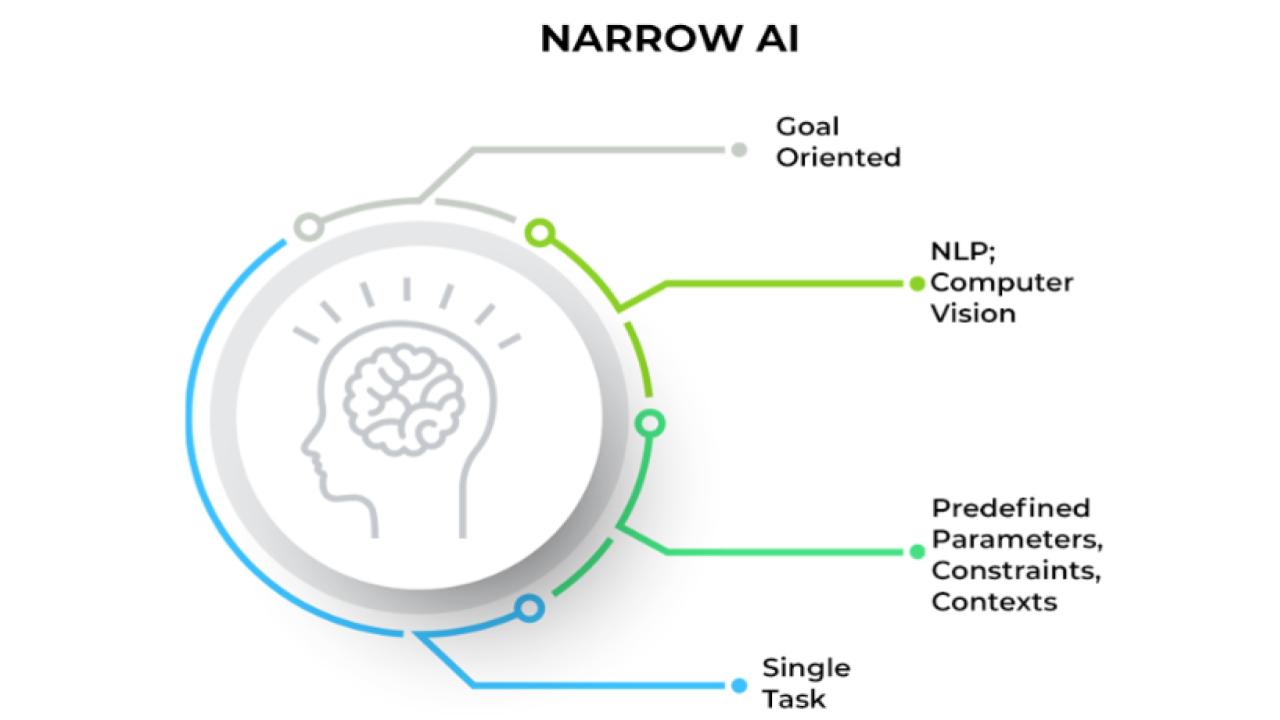

The conversation delves into the ethics of AI, the impact of deep learning on industries like computer vision, natural language processing, and robotics, and the increasing involvement of major tech companies in AI research. Bengio candidly discusses the risks and limitations of current AI models, addressing both public fears and misconceptions about artificial intelligence.

With a unique mix of technical depth and philosophical reflection, this masterclass offers a rare glimpse into the mind of one of AI’s leading thinkers. Whether you’re an AI researcher, a tech enthusiast, or simply curious about the future of intelligence, this session will leave you with a deeper understanding of how AI is transforming our world—and what lies ahead.

Topics Covered

The fundamentals of machine learning and deep learning

How AI models learn and generalize from data

The role of neuroscience in AI

The economic impact of deep learning breakthroughs

The challenges of unsupervised learning and reinforcement learning

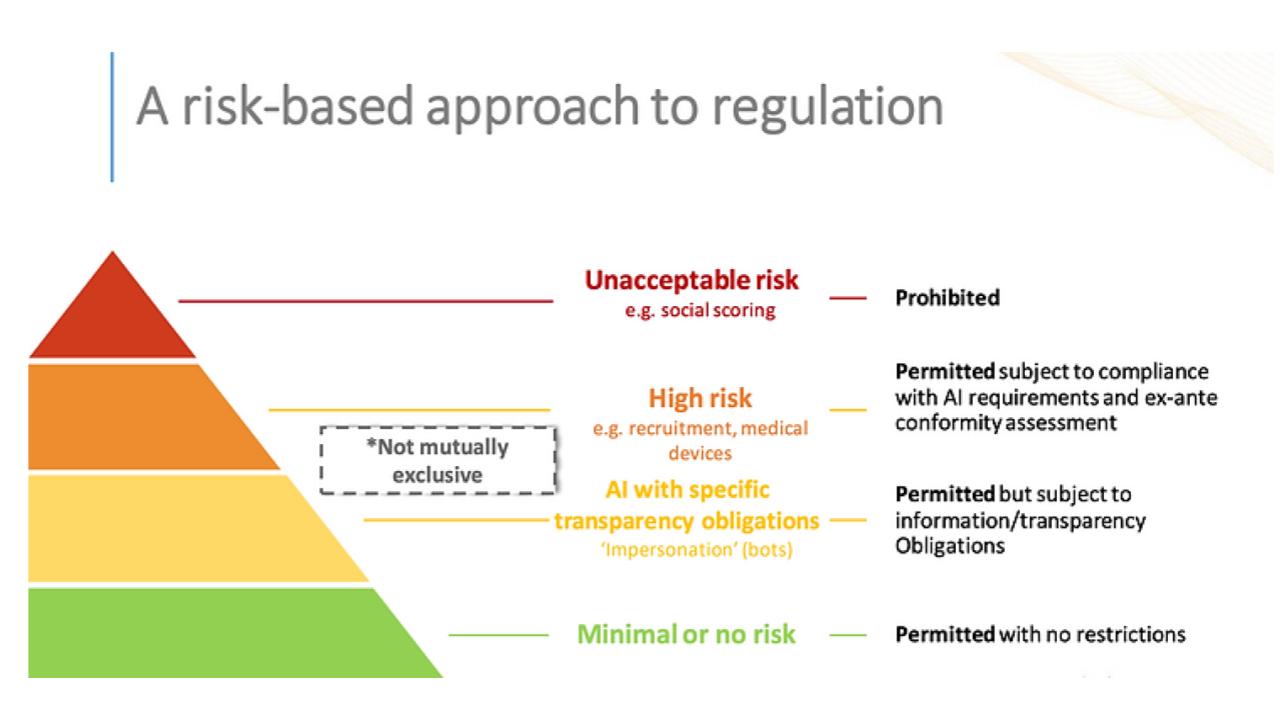

The ethical concerns and societal implications of AI

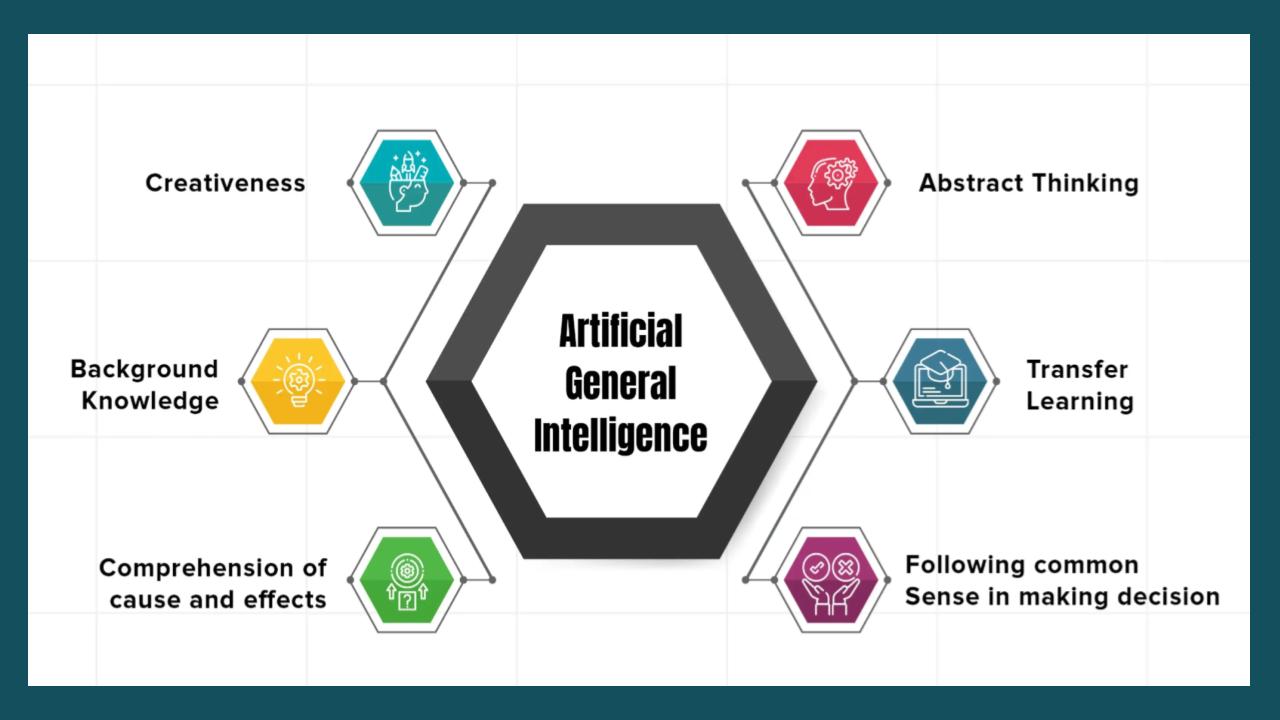

The future of human-level intelligence in machines

Timestamps

00:00:00 – Introduction: Professor Yoshua Bengio and the AI Landscape

00:00:32 – Deep Learning Explained: Machine Learning Meets Neural Networks

00:01:30 – Early Research: The Connectionist Movement & Graduate Beginnings

00:02:17 – Navigating Research Paths: Balancing Clarity and Exploration

00:03:34 – Defining Intelligence: The Quest for Underlying Principles

00:05:26 – Overcoming Challenges: Credit Assignment & Deep Planning

00:07:07 – Revolutionary Applications: From Speech Recognition to Computer Vision

00:08:48 – The AI Boom: From Academic Pioneering to Industry Investment

00:12:16 – Core Concepts in AI: Thinking, Learning, and Generalization

00:14:02 – Learning in Action: Iterative Adaptation and Neural Flexibility

01:00:05 – Debunking AI Fears: Misconceptions, Autonomy, and Ethics

01:17:37 – The Creative Process: Daily Routines, Meditation & Eureka Moments